
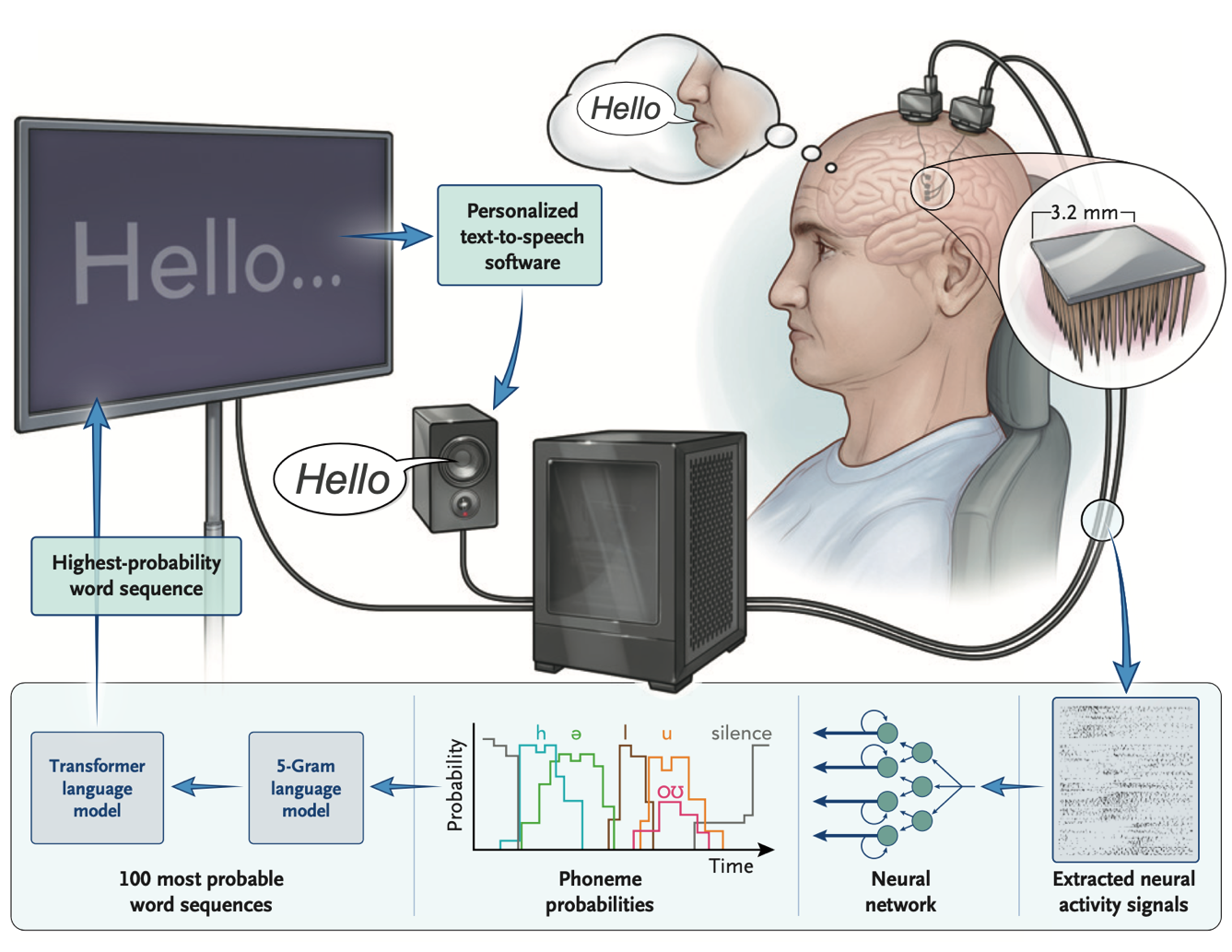

An Accurate and Rapidly Calibrating Speech Neuroprosthesis

The New England Journal of Medicine (2024)

Nicholas S. Card, Maitreyee Wairagkar, Carrina Iacobacci, Xianda Hou, Tyler Singer-Clark, Francis R. Willett, Erin M. Kunz, Chaofei Fan, Maryam Vahdati Nia, Darrel R. Deo, Aparna Srinivasan, Eun Young Choi, Matthew F. Glasser, Leigh R. Hochberg, Jaimie M. Henderson, Kiarash Shahlaie, Sergey D. Stavisky*, and David M. Brandman*.

* denotes co-senior authors

Overview

This repository contains the code and data necessary to reproduce the results of the paper "An Accurate and Rapidly Calibrating Speech Neuroprosthesis" by Card et al. (2024), N Engl J Med.

The code is written in Python, and the data can be downloaded from Dryad, here. Please download this data and place it in the data directory before running the code. Be sure to unzip t15_copyTask_neuralData.zip and t15_pretrained_rnn_baseline.zip.

The code is organized into five main directories: utils, analyses, data, model_training, and language_model:

- The `utils` directory contains utility functions used throughout the code.
- The `analyses` directory contains the code necessary to reproduce results shown in the main text and supplemental appendix.
- The `data` directory contains the data necessary to reproduce the results in the paper. Download it from Dryad using the link above and place it in this directory.
- The `model_training` directory contains the code necessary to train and evaluate the brain-to-text model. See the README.md in that folder for more detailed instructions.
- The `language_model` directory contains the ngram language model implementation and a pretrained 1gram language model. Pretrained 3gram and 5gram language models can be downloaded here (`languageModel.tar.gz` and `languageModel_5gram.tar.gz`). See `language_model/README.md` for more information.

Data

The data used in this repository consists of various datasets for recreating figures and training/evaluating the brain-to-text model:

- `t15_copyTask.pkl`: This file contains the online Copy Task results required for generating Figure 2.
- `t15_personalUse.pkl`: This file contains the Conversation Mode data required for generating Figure 4.
- `t15_copyTask_neuralData.zip`: This dataset contains the neural data for the Copy Task.
  - There are more than 11,300 sentences from 45 sessions spanning 20 months. Each trial of data includes:
    - The session date, block number, and trial number
    - 512 neural features (2 features [-4.5 RMS threshold crossings and spike band power] per electrode, 256 electrodes), binned at 20 ms resolution. The data were recorded from the speech motor cortex via four high-density microelectrode arrays (64 electrodes each). The 512 features are ordered as follows in all data files (0-indexed, inclusive ranges):
      - 0-63: ventral 6v threshold crossings
      - 64-127: area 4 threshold crossings
      - 128-191: 55b threshold crossings
      - 192-255: dorsal 6v threshold crossings
      - 256-319: ventral 6v spike band power
      - 320-383: area 4 spike band power
      - 384-447: 55b spike band power
      - 448-511: dorsal 6v spike band power
    - The ground truth sentence label
    - The ground truth phoneme sequence label
  - The data is split into training, validation, and test sets. The test set does not include ground truth sentence or phoneme labels.
  - Data for each session/split is stored in `.hdf5` files. An example of how to load this data using the Python `h5py` library is provided in the `load_h5py_file()` function in `model_training/evaluate_model_helpers.py`.
  - Each block of data contains sentences drawn from a range of corpora (Switchboard, OpenWebText2, a 50-word corpus, a custom frequent-word corpus, and a corpus of random word sequences). Most of the data was recorded during attempted vocalized speaking, but some of it was recorded during attempted silent speaking.
- `t15_pretrained_rnn_baseline.zip`: This dataset contains the pretrained RNN baseline model checkpoint and args. An example of how to load this model and use it for inference is provided in `model_training/evaluate_model.py`.
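The 512-feature ordering described for the Copy Task neural data can be written down as index ranges. The following is a minimal sketch, assuming the eight blocks are contiguous, 64 features wide, and zero-indexed along the feature axis. The label strings (`ventral_6v`, `threshold_crossings`, and so on) and the function name are hypothetical and are not taken from the repository code.

```python
# Sketch of the feature layout: 2 feature types x 4 arrays x 64 electrodes = 512.
# Label strings below are illustrative names, not identifiers from the repo.
FEATURE_TYPES = ("threshold_crossings", "spike_band_power")
ARRAYS = ("ventral_6v", "area_4", "55b", "dorsal_6v")
ELECTRODES_PER_ARRAY = 64


def feature_slices():
    """Map (feature_type, array) to a slice along the 512-feature axis."""
    slices = {}
    start = 0
    for feature_type in FEATURE_TYPES:
        for array in ARRAYS:
            slices[(feature_type, array)] = slice(start, start + ELECTRODES_PER_ARRAY)
            start += ELECTRODES_PER_ARRAY
    return slices


SLICES = feature_slices()

# Stand-in for one 20 ms bin of real data: each value equals its own index.
one_bin = list(range(512))
selected = one_bin[SLICES[("spike_band_power", "55b")]]
print(selected[0], selected[-1], len(selected))  # prints: 384 447 64
```

The same slices can be applied to the last axis of a time-by-feature array to pull out one array's features for a whole trial.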

Please download these datasets from Dryad and place them in the data directory. Be sure to unzip both datasets before running the code.
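Before running any analysis, it can help to confirm that the downloads landed where the code expects them. The sketch below is one way to do that. It assumes each archive unzips to a folder named after the archive (for example `t15_copyTask_neuralData`), which is a guess about the archive contents, and the helper name `missing_data_items` is hypothetical.

```python
from pathlib import Path

# The .pkl names come from the dataset list above. The two folder names are
# an assumption: each .zip is assumed to extract to a folder with its own name.
EXPECTED = (
    "t15_copyTask.pkl",
    "t15_personalUse.pkl",
    "t15_copyTask_neuralData",
    "t15_pretrained_rnn_baseline",
)


def missing_data_items(data_dir="data", expected=EXPECTED):
    """Return the expected names that are not present in data_dir."""
    root = Path(data_dir)
    return [name for name in expected if not (root / name).exists()]


if __name__ == "__main__":
    missing = missing_data_items()
    if missing:
        print("Missing from data/:", ", ".join(missing))
    else:
        print("All expected data items found.")
```

Run it from the root directory of the repository so that the relative `data` path resolves correctly.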

Dependencies

- The code has only been tested on Ubuntu 22.04 with two NVIDIA RTX 4090 GPUs.

- We recommend using a conda environment to manage the dependencies. To install miniconda, follow the instructions here.

- Redis is required for communication between Python processes. To install Redis on Ubuntu:

  - https://redis.io/docs/getting-started/installation/install-redis-on-linux/

  - In terminal:

curl -fsSL https://packages.redis.io/gpg | sudo gpg --dearmor -o /usr/share/keyrings/redis-archive-keyring.gpg

echo "deb [signed-by=/usr/share/keyrings/redis-archive-keyring.gpg] https://packages.redis.io/deb $(lsb_release -cs) main" | sudo tee /etc/apt/sources.list.d/redis.list

sudo apt-get update

sudo apt-get install redis

  - Turn off autorestarting for the Redis server in terminal:

sudo systemctl disable redis-server

- `CMake >= 3.14` and `gcc >= 10.1` are required for the ngram language model installation. You can install these on Linux with `sudo apt-get install cmake` and `sudo apt-get install build-essential`.
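The system dependencies listed above can be checked with a short script. This is a rough sketch rather than part of the repository: it assumes `cmake --version` and `gcc --version` print the version number on their first line (as they do on Ubuntu), and it takes the first dotted number on that line as the version. The helper names are hypothetical.

```python
import re
import shutil
import subprocess


def parse_version(text):
    """Extract the first dotted version number in text as a tuple of ints."""
    match = re.search(r"(\d+(?:\.\d+)+)", text)
    return tuple(int(p) for p in match.group(1).split(".")) if match else None


def tool_version(command):
    """Run `<command> --version` and parse its first line; None if unavailable."""
    if shutil.which(command) is None:
        return None
    result = subprocess.run([command, "--version"], capture_output=True, text=True)
    lines = result.stdout.splitlines()
    return parse_version(lines[0]) if lines else None


def meets_minimum(version, minimum):
    """True if a parsed version tuple is at least the minimum tuple."""
    return version is not None and version >= minimum


if __name__ == "__main__":
    # Minimum versions as stated in the dependency list.
    for command, minimum in (("cmake", (3, 14)), ("gcc", (10, 1))):
        version = tool_version(command)
        status = "OK" if meets_minimum(version, minimum) else "missing or too old"
        print(command, version, status)
    print("redis-server on PATH:", shutil.which("redis-server") is not None)
```

The version parsing is a heuristic, so a "missing or too old" result is worth confirming by hand with `cmake --version` or `gcc --version`.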

Python environment setup for model training and evaluation

To create a conda environment with the necessary dependencies, run the following command from the root directory of this repository:

./setup.sh

Verify it worked by activating the conda environment with the command conda activate b2txt25.

Python environment setup for ngram language model and OPT rescoring

We use an ngram language model plus rescoring via the Facebook OPT 6.7b LLM. A pretrained 1gram language model is included in this repository at language_model/pretrained_language_models/openwebtext_1gram_lm_sil. Pretrained 3gram and 5gram language models are available for download here (languageModel.tar.gz and languageModel_5gram.tar.gz). Note that the 3gram model requires ~60GB of RAM, and the 5gram model requires ~300GB of RAM. Furthermore, OPT 6.7b requires a GPU with at least ~12.4 GB of VRAM to load for inference.
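Given the RAM figures quoted above, a quick check can suggest which pretrained ngram model a machine is likely to hold. The sketch below is illustrative only: the 60 GB and 300 GB thresholds are the approximate values from this README, the 1gram footprint is not stated here and is assumed to always fit, and the function names are hypothetical. It reports total physical memory on Linux, not memory currently free.

```python
import os

# Approximate RAM needs in GB, from the text above. The 1gram requirement is
# not documented here, so it is treated as always loadable (assumption).
NGRAM_RAM_GB = {"1gram": 0, "3gram": 60, "5gram": 300}


def total_ram_gb():
    """Total physical memory in GB (Linux; uses POSIX sysconf values)."""
    return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES") / 1e9


def largest_ngram_that_fits(ram_gb, requirements=NGRAM_RAM_GB):
    """Return the largest model whose stated RAM need is within ram_gb."""
    fitting = [name for name, need in requirements.items() if need <= ram_gb]
    return max(fitting, key=lambda name: requirements[name])


if __name__ == "__main__":
    ram = total_ram_gb()
    print(f"Total RAM: {ram:.0f} GB, largest model likely to fit:",
          largest_ngram_that_fits(ram))
```

Because other processes also use memory, treat the result as an upper bound and leave headroom when choosing between the 3gram and 5gram models.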

Our Kaldi-based ngram implementation requires a different version of torch than our model training pipeline, so running the ngram language models requires an additional, separate conda environment. To create this conda environment, run the following command from the root directory of this repository. For more detailed instructions, see the README.md in the language_model subdirectory.

./setup_lm.sh

Verify it worked by activating the conda environment with the command conda activate b2txt25_lm.